Data scientists spend 80% of their time cleaning data while the 20% remaining is dedicated to generating insights.

80/20 Data Rule

Data is the obvious foundation of a data-driven company. But unless you have timely, relevant, and trustworthy data, the decision-makers in your organization will often be left with no alternative other than to keep taking decisions using solely their intuition. Hence, data quality is key nowadays.

However, data quality is not just important for the decision-makers. For any data analyst, getting their hands dirty creating insights, it is essential to have the right data – collected in the right manner, in the right form, in the right place, and at the right time. If any of these aspects are lacking or missing, they are very limited in the questions that they can answer and the quality of the insights that they will generate from the data.

The aim of this article is to give a brief overview of the acknowledged ways that render data reliable, while also highlighting other ways in which it can be unreliable and providing some tips that can help you to mitigate such scenarios.

What are the facets of data quality?

Unfortunately, data quality is not something that it is numerically quantifiable. Quality is not a five or an eight. When it comes to data quality, some issues are relatively more serious than others. The severity of those issues will depend on the context of the analysis to be performed with the data. To understand this better, we need to determine what are the attributes of a high-standard dataset or data source.

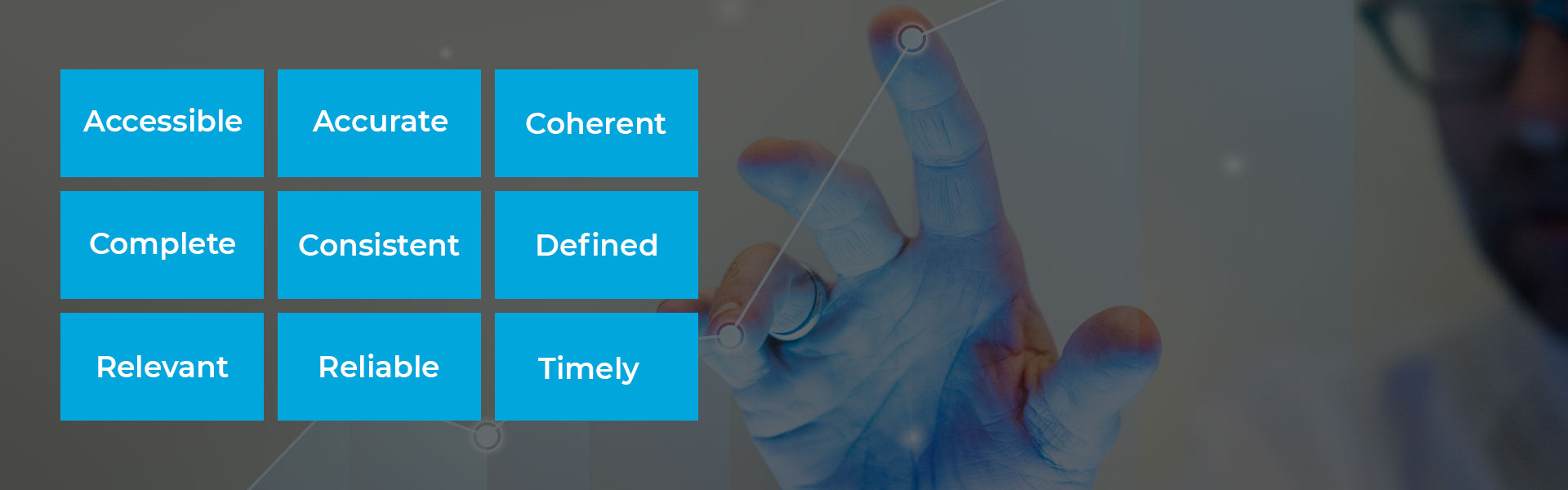

The attributes are shown in the image below. Any issue in just one of these headings can render your data useless, partially useless, or worst of all: seemingly usable but misleading.

It is important to be aware of those factors when working with any data to ensure you are able to acknowledge and identify any wrong data insights or conclusions that can potentially have an adverse effect on your business. In the next part of this article, I will be describing some of the processes and most common processes that drive down data quality, as well as some approaches you can apply to detect and mitigate those issues.

Common situations in which your data can go down the drain… and how to avoid them

Let’s admit it… data can go bad in a lot of different ways and at every step of the data collection process: starting from its generation and ending in its analysis. And sadly, this might have huge negative repercussions for your organisation. According to one study conducted in the US, dirty or poor data quality costs businesses $600 billion annually (that’s 3.5% of the American GDP!).

This reinforces the importance of this part of the article; going through the most common data quality problems and pitfalls and giving some brief tips on how to identify and correct them before they become problematic.

- Data generation is the most upstream source of issues and can arise from errors in hardware (sensors), software (bugs), and wetware (humans).

In hardware, data gathered from sensors can be miscalibrated or uncalibrated, leading to inaccurate results; for example, a temperature sensor can misread a temperature of 40°C when in reality it may be 35°C. In those instances, one way to fix this is calibrating it against another source of truth during the setup.

When it comes to the human factor, the challenge is bigger. Unfortunately, the ways of introducing errors in the collection process are countless; not knowing how to use the equipment properly, not following the protocols, being in a rush, or just sloppy …. And I could go on. To try to mitigate this as much as possible there are two actions you can take: define clear, simple, and understandable protocols, and provide all the training necessary to fix any issues.

- Data entry – Having read the previous paragraph, the words ‘data entry’ by themselves are likely to bring about a clear idea to most readers of the potential disasters that could occur at this stage. When data is generated, it must be recorded in some sort of computer; the biggest problem is that in some instances, there is an intermediate step where the data is recorded on paper forms. Think about how the healthcare system works. In fact, in this area, we can find a lot of research on the impact of data quality. In one of those studies, it was found that 46% of medication errors were attributed to transcription mistakes!

How can this be mitigated? Well, first we need to try to remove human intervention as much as possible and have data collected and stored automatically whenever possible. Reducing the number of steps from data generation to input or adding field validation in case of electronic forms will help you to prevent the oft-quoted ‘garbage in, garbage out’ at the source.

- Missing data – This is one of the most significant issues with data, either through being either incomplete or missing. It can be manifested in two ways: either data within a record or wholly missing records. The type of missingness is critical, and you will need to investigate further whether the data is:

-

- Missing completely at random

- Missing at random due to some sampling function that is not optimally defined (i.e.. Some records may be missing intentionally, but there are other records that are missing unintentionally)

- Not missing at random – very frequent in surveys leading to significant bias.

Recognizing and understanding any biases will allow you to size the impact on the quality of your data and help you to come up with actions to mitigate it.

- Duplicates – Having the same record multiple times is a very frequent problem, particularly when you are dealing with data stored in a database. Analysts need to be very careful with this type of problem as it can often degrade data quality. In this situation, my advice is to run some quality checks before starting to build any reports and come up with any conclusions. The best way to avoid duplicates is just to add constraints or manipulations from the source to remove them.

- Truncated data – This is another problem that is generated at a database level. This usually happens in relational databases when creating a table where you need to specify each field name and type. For example, Customer ID will be an integer with 4 characters. What happens should you reach your 10000th customer? At this stage, the maximum integer length of the values you will encounter when ingesting the data will not always be clear in advance. One way to prevent this situation is to set your database to strict mode so that warnings become full-blown errors that you can easily catch.

- Units – Last but not least: inconsistency in units. This is something very frequent in international teams and datasets, the classic example is having a sales table where the revenue is inputted in different currencies. An easy way to reduce this problem is communication. Have a requirements document that sets out how items are measured, recorded, and their units, and accompany it with a detailed dictionary. With more metadata and context available, it gets easier to reduce ambiguities.

A good data lineage will avoid errors and improve your data quality

When data quality issues are found, it is crucial to trace them back to their origin. That way, the whole subset of data can be removed from the analysis, or better processes can be devised and put in place to remedy the problem. Metadata that stores the origin and change history of data is known as data lineage, and there are mainly two classes: one that tracks where data comes from and one that tracks changes made to the data. Having this type of information in hand will make your life easier when you have any data quality issues. It will help you to easily identify where the problem lies. Having a clear strategy in maintaining the data lineage documentation will not only have a positive impact on your data quality but also help in reducing the time needed to deal with any data issues you encounter.

Data quality is a shared responsibility across your organization

Having gone through the most common causes of poor data quality, you can appreciate that this is a problem that cannot be left to the technical guys within the IT department. This is something that needs to be shared across your organization: from the employees imputing information in systems to the engineering team dealing with the databases or the analysts coming up with insights. Communication, training, and having the right policies and procedures in place will create awareness across departments of the importance of good data quality.

Do you often encounter data quality issues in your business? Would you like to improve not only your data quality but also, set up the right processes and data lineage? If you want to learn more about this topic and how we can help you to layout strong foundations towards building a data-driven organization reach out to us at sales@iMovo.com. Our expert advisors can help you pave the way in your data journey. Reach out and request a free consultation with one of our advisors.